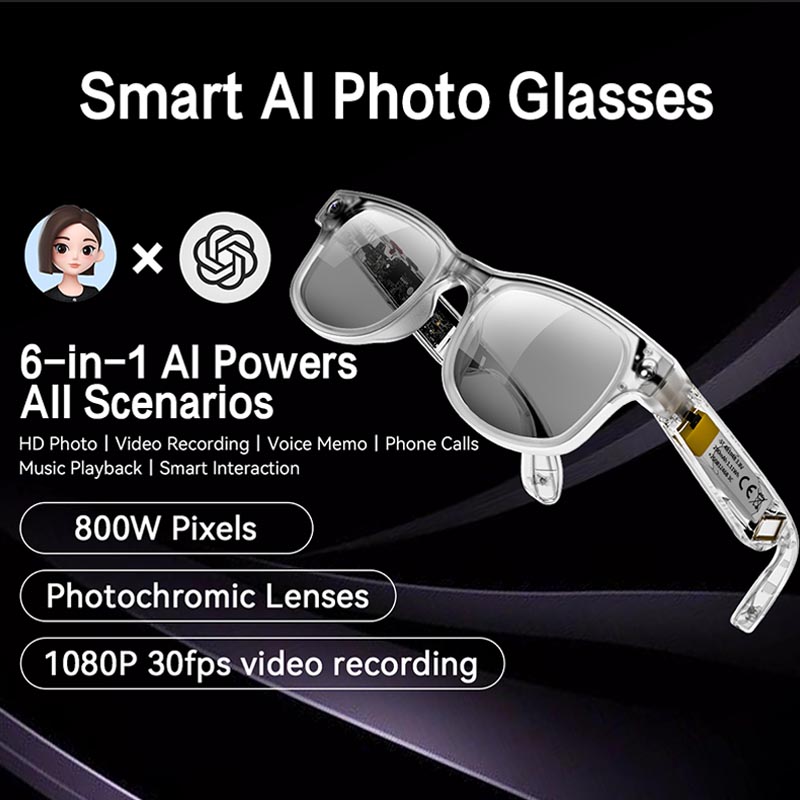

In recent years, smart glasses have moved from concept to reality: what once seemed futuristic is now entering the mainstream. With features such as built-in cameras, microphones, heads-up displays, and AI integration, these devices (often called AI smart glasses or simply smart glasses) open up a wide range of possibilities. But that promise raises a key question: are smart glasses legal?

The answer is yes, but conditionally. Legality depends heavily on how you use them, where you are, which features are active, and whether you comply with applicable laws, corporate policies, and ethical norms.

For a company like Wellypaudio, whose core competency lies in the customization and wholesale of cutting-edge audio and AI wearables, understanding the legal and regulatory environment around smart glasses is vital. Whether your customers integrate audio-only smart glasses or full AR/AI smart eyewear, you'll want to know the risk areas, best practices, and proactive strategies that reduce legal exposure.

Let’s break down the major legal fronts affecting smart glasses: privacy and recording laws, intellectual property and corporate policy issues, safety and distracted-use regulation, and a global/regional regulatory view. Finally, we’ll draw out practical steps for wearable-device makers, integrators, and wholesalers.

1. What makes smart glasses legally interesting?

On the surface, a pair of ordinary eyeglasses is completely unremarkable from a legal perspective. But when you add features such as cameras, microphones, sensors, connectivity (Bluetooth/WiFi), heads-up displays, and AI-powered functionality, you move into a zone of potential legal complexity.

As one article succinctly states:

“At its core, a standard pair of eyeglasses is legally benign. The issues arise from the features that make them ‘smart’: cameras, microphones, sensors, displays, and connectivity.”

In other words, smart glasses become more than a fashion item: they become a data-capture device, a display interface, a recording tool, and potentially a surveillance instrument. Those capabilities bring them into contact with privacy law, audio- and video-recording statutes, intellectual property, safety regulation, corporate policy, and more.

For example:

● The camera can record video or photos of people, places, and events.

● The microphone can capture audio of conversations (which may implicate wiretap/eavesdropping laws).

● A display/AR overlay may impact safety (for example, if used while driving).

● When connected to cloud/AI services, data may be transmitted, stored, processed—raising data protection concerns.

● In a business or industrial setting, the device may inadvertently capture trade secrets or restricted processes.

● In a social or public setting, unauthorized recording may violate rights of publicity or constitute voyeurism/hidden-camera crime.

Thus, the legality of smart glasses does not reduce to “wearing them is fine.” The use case, the location, the features activated, and whether consent or notice was given all matter critically.

2. Privacy & Recording Laws: The Main Legal Frontier

(a) Audio & video capture in the U.S.

One of the most legally sensitive uses of smart glasses is recording audio and/or video, especially when this is done without the explicit knowledge of others. Many legal issues stem from wiretapping/eavesdropping statutes and expectation-of-privacy rules.

In the U.S., the main federal statute is the Wiretap Act (18 U.S.C. § 2510 et seq.), which generally permits recording when at least one party to the conversation consents. Most of the nuance, however, lies in state-level consent laws, some of which are stricter.

A key distinction:

● One-party consent states: only one person in the conversation needs to consent to the recording (and that person may be the one doing the recording).

● All-party consent states (sometimes “two-party consent”): every party to the conversation must consent to the recording.

For example, California, Florida, Illinois, Pennsylvania, and Washington are all-party consent jurisdictions. ([INAIRSPACE][2])

Thus, if someone wearing smart glasses records a private conversation in California without everyone’s consent, they may face criminal or civil liability.
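For developers of companion apps or firmware, the difference between the two rules can be expressed as a simple check. The Python sketch below is illustrative only: `audio_recording_allowed` is a hypothetical helper, the state set contains just the five examples named above rather than a complete list, and a real product would need legal review of each jurisdiction's rules and exceptions.

```python
from __future__ import annotations

# Illustrative only: the five all-party-consent examples named in the article.
# This is NOT a complete list of all-party-consent states.
ALL_PARTY_CONSENT_STATES = frozenset({"CA", "FL", "IL", "PA", "WA"})


def audio_recording_allowed(state: str, parties: set[str], consenting: set[str]) -> bool:
    """Hypothetical check of whether a state's consent rule appears satisfied.

    state: two-letter U.S. state code where the conversation takes place.
    parties: everyone taking part in the conversation, including the wearer.
    consenting: the people who have agreed to be recorded.
    """
    if not parties:
        return False  # no conversation, nothing to evaluate
    agreed = consenting & parties  # consent from non-participants does not count
    if state.upper() in ALL_PARTY_CONSENT_STATES:
        return agreed == parties  # all-party rule: every participant must agree
    return len(agreed) >= 1  # one-party rule: a single participant is enough


# Usage: the wearer talks with Alice in California vs. New York.
print(audio_recording_allowed("CA", {"wearer", "alice"}, {"wearer"}))  # False
print(audio_recording_allowed("NY", {"wearer", "alice"}, {"wearer"}))  # True
```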

Video raises separate issues. Recording someone in a place where they have a reasonable expectation of privacy (e.g., restrooms, changing rooms, private homes) may constitute voyeurism or another criminal offense. As noted:

“While individuals have a reduced expectation of privacy in public spaces, using a device that can continuously and discreetly record raises novel questions.”

(b) Public vs private spaces

In public spaces (e.g., streets, parks), the expectation of privacy is low, so capturing video alone may be legally permissible. Hidden audio recording, or recording with the intent to broadcast, may still be problematic, however. As one tech-law commentary noted:

“Recording people in public is generally legal. … Hidden recording is probably still legal. In some states, there is a right to publicity.”

Nevertheless, ethics and social acceptance come into play: just because something *can* be legally recorded doesn't mean it should be. And commercial use of someone's image may trigger right-of-publicity claims.

(c) Wearables as “hidden cams”

Smart glasses blur the line between obvious cameras (a smartphone held up) and hidden cameras (glasses that look “normal” but record). Many commentators believe this creates elevated risk:

“The discreet nature of smart glasses makes it easier to violate these laws, increasing the legal risk for the user.”

As one piece states:

“Privacy laws can’t keep up with ‘luxury surveillance’. … The open-fields doctrine and one-party consent statutes were not designed for modern wearable surveillance devices.”

From a manufacturer's or wholesaler's perspective, this means that offering smart glasses (especially with recording/AI features) carries responsibilities: providing clear user instructions, warning about legal and regulatory risks, and perhaps designing consent/notification features (an LED indicator, an audible beep, etc.).
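One way to make a recording indicator trustworthy is to tie it to the capture path, so recording cannot begin unless the indicator is lit. The Python class below is a hypothetical model of that logic rather than real firmware; `indicator_ok` stands in for whatever LED self-test the hardware provides, and the event strings are placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CaptureController:
    """Hypothetical firmware model: capture is only possible while the indicator is on."""

    indicator_ok: bool = True  # stand-in for a real LED self-test result
    led_on: bool = False
    recording: bool = False
    events: list[str] = field(default_factory=list)

    def start_recording(self) -> bool:
        # Refuse to record at all if the bystander-facing indicator cannot be shown.
        if not self.indicator_ok:
            self.events.append("blocked: indicator unavailable")
            return False
        self.led_on = True          # light the LED before capture begins
        self.events.append("beep")  # optional audible cue for bystanders
        self.recording = True
        return True

    def stop_recording(self) -> None:
        # The indicator is tied to capture: it goes off only when recording stops.
        self.recording = False
        self.led_on = False
```

In this model, `start_recording` is the only code path that sets `recording` to `True`, so capture never begins with the indicator off; a hardware interlock would make that guarantee stronger than software alone.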

3. Intellectual Property, Corporate/Workplace Policies & Safety

(a) Intellectual property and corporate risk

Smart glasses also raise IP and business-confidentiality concerns. As one write-up explains:

“Consider a user attending a live concert… The use of smart glasses to record a performance would likely constitute a breach of contract with the venue and a violation of copyright law, as the user is creating an unauthorized copy of the protected work.”

In a workplace or manufacturing setting, an employee wearing AI smart glasses with cameras or streaming capability might inadvertently record trade secrets, proprietary manufacturing processes, or board meetings. That may violate confidentiality agreements or corporate policy.

Corporations are increasingly updating policies to ban or restrict the use of wearable recording devices in sensitive areas.

(b) Distracted use/safety regulation

Another dimension: safety laws relating to vehicle use or the operation of heavy machinery. Smart glasses may feature heads-up displays (HUDs), text/notification overlays, and navigation prompts. That raises regulatory questions: Is it safe (or legal) to use those while driving or operating equipment?

As the source article notes:

“Some existing laws that prohibit watching a screen or displaying a monitor visible to the driver could be interpreted to cover smart glasses. … Until legislation is specifically written to address augmented reality displays, their use while driving will remain a legal gray area subject to interpretation.”

From a product-maker standpoint, if your smart glasses include AR overlays, you should warn users not to use them while driving, provide clear disclaimers, and consider disabling certain features when motion is detected.
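A motion-based restriction could be implemented as a speed threshold with hysteresis, so overlays do not flicker on and off when speed hovers around the limit. This sketch assumes the glasses or a paired phone supply a speed estimate in km/h; the class name and the 15/10 km/h thresholds are hypothetical placeholders, not values taken from any regulation.

```python
from dataclasses import dataclass


@dataclass
class DrivingModeGuard:
    """Hypothetical HUD lock-out: hide overlays above a speed threshold.

    Uses hysteresis (a lower re-enable threshold) so the display does not
    toggle rapidly when speed hovers around the limit.
    """

    lock_above_kmh: float = 15.0    # placeholder value, not a legal standard
    unlock_below_kmh: float = 10.0  # must be lower than lock_above_kmh
    locked: bool = False

    def update(self, speed_kmh: float) -> bool:
        """Feed a new speed estimate; return True if the HUD may be shown."""
        if not self.locked and speed_kmh > self.lock_above_kmh:
            self.locked = True
        elif self.locked and speed_kmh < self.unlock_below_kmh:
            self.locked = False
        return not self.locked


guard = DrivingModeGuard()
print([guard.update(s) for s in (5, 20, 12, 8)])  # [True, False, False, True]
```

A speed signal cannot distinguish a driver from a passenger, which is one reason to pair an automatic lock-out like this with a user-configurable driving mode.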

(c) Corporate/workplace usage

For a company like Wellyp Audio supplying AI smart glasses, it's important to consider enterprise and industrial use, not only consumer use. Businesses may have internal policies banning wearable cameras in R&D labs, on manufacturing floors, in meetings, and so on. Smart glasses with recording or AI features must therefore support enterprise governance: disabling recording in certain zones, tethering to corporate control, and maintaining audit logs.
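Zone-based disabling and audit logging might be modeled as an admin-managed policy object that the glasses consult before activating a sensor. The sketch below is hypothetical: the zone names, the `EnterprisePolicy` class, and the log format are assumptions, and a real deployment would likely receive zone information from the corporate network or a device-management system rather than a hard-coded set.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class EnterprisePolicy:
    """Hypothetical admin-managed policy: zones where camera or mic must stay off."""

    camera_blocked_zones: set[str] = field(default_factory=set)
    mic_blocked_zones: set[str] = field(default_factory=set)
    # Each entry: (UTC timestamp, user, "sensor@zone", "allow" or "deny").
    audit_log: list[tuple[str, str, str, str]] = field(default_factory=list)

    def request(self, user: str, sensor: str, zone: str) -> bool:
        """Decide whether `sensor` ("camera" or "mic") may run in `zone`, and log the decision."""
        blocked_by_sensor = {"camera": self.camera_blocked_zones, "mic": self.mic_blocked_zones}
        if sensor not in blocked_by_sensor:
            raise ValueError(f"unknown sensor: {sensor!r}")
        allowed = zone not in blocked_by_sensor[sensor]
        stamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append((stamp, user, f"{sensor}@{zone}", "allow" if allowed else "deny"))
        return allowed


policy = EnterprisePolicy(camera_blocked_zones={"rnd-lab", "factory-floor"})
print(policy.request("emp-042", "camera", "rnd-lab"))  # False: camera blocked here
print(policy.request("emp-042", "mic", "rnd-lab"))     # True: mic not restricted in this zone
```

Logging denials as well as approvals gives compliance teams a record of attempted use, not just successful use.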

4. Global/Regional Legal Perspectives

(a) European Union & data protection

Outside the U.S., data protection regulations add another layer. In the European Union, the General Data Protection Regulation (GDPR) enforces principles of data minimization, purpose limitation, lawful basis for processing, transparency, security, etc.

Smart glasses that capture people's images, voices, and locations, identify them with AI, and send the data to the cloud raise significant GDPR concerns: Are data subjects informed? Have they consented, or is there another lawful basis for processing? Is the processing necessary and proportionate? Is the data adequately protected?
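GDPR's data-minimization and purpose-limitation principles point toward filtering captured data on the device before anything is sent to the cloud. The Python sketch below shows one hypothetical way to do that; the purposes, field names, and `minimize_payload` helper are invented for illustration, and filtering alone does not establish a lawful basis or satisfy transparency obligations.

```python
from __future__ import annotations

# Hypothetical mapping from a declared processing purpose to the only fields
# that purpose needs. Purpose and field names are illustrative assumptions.
ALLOWED_FIELDS_BY_PURPOSE: dict[str, frozenset[str]] = {
    "translation": frozenset({"audio_clip", "source_language", "target_language"}),
    "navigation": frozenset({"coarse_location"}),
}


def minimize_payload(payload: dict[str, object], purpose: str) -> dict[str, object]:
    """Drop every field not needed for `purpose` before data leaves the device.

    Unknown purposes get an empty payload: nothing is uploaded without a declared need.
    """
    allowed = ALLOWED_FIELDS_BY_PURPOSE.get(purpose, frozenset())
    return {key: value for key, value in payload.items() if key in allowed}


# Placeholder capture: the camera frame and precise GPS are never needed for translation.
captured = {
    "audio_clip": b"<audio bytes>",
    "source_language": "de",
    "target_language": "en",
    "camera_frame": b"<jpeg bytes>",
    "gps": (52.52, 13.40),
}
print(sorted(minimize_payload(captured, "translation")))
# ['audio_clip', 'source_language', 'target_language']
```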

(b) Other jurisdictions

In South Korea, for example, strong portrait rights (초상권) protect a person's image, including against unauthorized commercial use, and secret filming is punishable.

In many parts of the world, the legal framework remains underdeveloped relative to the speed of wearable tech innovation. That means companies and users may face grey areas and uncertain liability.

(c) International travellers / cross-border issues

If you sell or distribute smart glasses globally, you must account for varying laws across U.S. states, the EU, Asia, and the Middle East. A device that is legal in one jurisdiction may be problematic in another. Moreover, a user traveling abroad with your AI smart glasses may inadvertently break local law, so advising your customers accordingly is prudent.

5. Best Practices for AI Smart Glasses Manufacturers, Wholesalers & Integrators

Since Wellyp Audio specializes in customization and wholesale of AI smart-glasses/audio-wearables, here is a tailored set of recommended practices:

• Design for transparency & consent

– Include a visible indicator (LED light) or audible cue when recording is active. This helps signal to bystanders that recording is occurring. Some smart glasses already have this built in.

– Provide a clear opt-out or disable switch for recording features.

– Include a user-compliance guide in the product literature: check local, state, and national laws before recording, and respect the privacy of others.

• Clear user-agreements & compliance documentation

– When you supply custom smart glasses, provide a terms-of-use/acceptance agreement or policy.

– Encourage your customers (wholesale & enterprise) to adopt internal policies around where/when recording is permitted.

– For enterprise clients, provide options: disable camera/mic, zone-based disable (e.g., manufacturing floor), audit-log features.

• Safety & regulatory disclaimers

– If your smart glasses support heads-up display, notifications, navigation overlays: include warnings about not using them while driving or operating heavy machinery.

– Consider software lock-outs (e.g., disabling display while speed > X km/h) or user-configurable “driving mode”.

• International compliance guidance

– Since you may distribute globally (including to the UK, Europe, and Asia), provide customers with a summary of the major regional legal landscapes: U.S. state consent laws, GDPR implications, and Asia-Pacific rules on portrait rights and secret filming.

– Provide multilingual documentation when selling in the UK and EU (where UK data protection law and the EU GDPR apply) and in other major export markets.

• Branding & ethical positioning

– Frame the product not just as a recording device, but as AI smart glasses for productivity, accessibility, learning, translation, and audio enhancement (aligning with Wellyp Audio’s positioning). Emphasize ethical use.

– Promote user education prominently: wear responsibly, notify subjects when recording, and do not record in private spaces (restrooms, locker rooms).

• Certification & security

– Because the device may capture personal data (faces, audio), apply appropriate data-security practices: encryption of stored and transmitted data, secure firmware updates, and a secure cloud/API. This also reduces legal exposure, for example liability for data breaches.

– If useful, obtain or reference compliance badges (e.g., GDPR readiness, privacy-by-design statements) for marketing.

• Corporate/Enterprise integration

– For wholesale or enterprise clients, offer enterprise firmware mode: cameras/mics disabled by admin; restricted zones; audit logs; tether to corporate network.

– Provide training sessions or documentation for enterprise clients on corporate policy drafting around wearable use.

6. Summary: Are Smart Glasses Legal?

In short: Yes, smart glasses are legal in many circumstances, but not universally safe or risk-free. The legality depends on who, where, what features, consent, and jurisdiction. As the source article puts it:

“Ultimately, the question of whether smart glasses are legal is not a simple yes or no. It is a conditional yes, heavily dependent on context, location, and intent.”

From a business perspective (for Wellyp Audio and your customers), the best strategy is to assume legal risk exists and adopt a compliance-first mindset: design features that enable consent/visibility, provide clear documentation/training, carve out safe use-cases (non-recording audio/translation mode may carry less risk), and inform customers of major legal frameworks.

To recap the key risk areas:

● Recording audio/video without consent (especially in all-party consent states or private places).

● Recording in sensitive physical environments (lockers, bathrooms, changing rooms).

● Recording or streaming copyrighted performances without authorization.

● Corporate use in confidential environments risks IP leakage.

● Safety/distracted-use regulation (driving, heavy machinery).

● International data protection laws and regional filming/portrait rights.

On the positive side, smart glasses offer tremendous opportunities: AI translation, immersive productivity, hands-free AR experiences, accessibility enhancement, enterprise training, and remote assistance. The legal and ethical framework is catching up, and acting proactively now offers both a competitive advantage and reduced risk.

7. Future Outlook & Strategic Implications

Looking ahead, legislation is still playing catch-up. According to recent analysis, we can expect to see specific bans in sensitive locations, clarification of driving laws, mandatory recording-indicator lights, and enhanced data security requirements.

From a product-strategy perspective for Wellypaudio:

● Position your offering as “AI smart glasses with privacy-by-design” to differentiate from generic camera glasses.

● Highlight features like translation/interpretation, audio-enhancement, and enterprise training rather than surreptitious recording. This reduces legal and reputational risk.

● Offer customization: for clients in jurisdictions with strict laws (EU, California, etc.), you can configure variants with the camera/mic disabled, or with recording enabled only when explicit consent is given.

● Build a partnership with legal/compliance: ensure your product manuals, user contracts, export compliance, and data-security architecture are aligned with major jurisdictions.

● Educate your channel (wholesale, retail) on the “responsible use” story: being mindful of social norms, consent, signage in public spaces, and business policy. This helps avoid brand damage from “smart glasses gone wrong”.
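Per-market variants of this kind can be expressed as a small feature profile selected at build time. The sketch below is hypothetical: the profile names, fields, and the EU/California assignments are illustrative choices a client might make, not recommendations for any specific jurisdiction.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class VariantConfig:
    """Hypothetical build-time feature profile for a product variant."""

    camera_enabled: bool            # camera hardware active at all
    recording_enabled: bool         # audio/video may be saved (live translation can still run)
    require_explicit_consent: bool  # saving unlocks only after a consent step


AUDIO_ONLY = VariantConfig(camera_enabled=False, recording_enabled=False, require_explicit_consent=True)
CONSENT_GATED = VariantConfig(camera_enabled=True, recording_enabled=True, require_explicit_consent=True)

# Illustrative client choice per market, not a legal determination.
VARIANT_BY_MARKET = {"EU": AUDIO_ONLY, "US-CA": CONSENT_GATED}


def config_for(market: str) -> VariantConfig:
    # Unknown markets fall back to the consent-gated profile rather than an unrestricted one.
    return VARIANT_BY_MARKET.get(market, CONSENT_GATED)


def may_start_recording(cfg: VariantConfig, *, video: bool, consent_given: bool) -> bool:
    if not cfg.recording_enabled:
        return False  # audio-only variants never store captures
    if video and not cfg.camera_enabled:
        return False  # no camera, no video
    return consent_given or not cfg.require_explicit_consent
```

Defaulting unknown markets to the stricter profile keeps a configuration gap from silently producing an unrestricted device.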

Finally, even though the legal landscape is complex and evolving, the technology wave is real. Smart glasses (especially AI-enabled ones) are going mainstream. By treating compliance as part of product design and brand positioning—rather than an afterthought—you turn from a gadget-maker into a trusted innovative partner in the wearable-AI ecosystem.

Ready to explore custom wearable smart glass solutions? Contact Wellypaudio today to discover how we can co-design your next-generation AI or AR smart eyewear for the global consumer and wholesale market.

Post time: Nov-28-2025