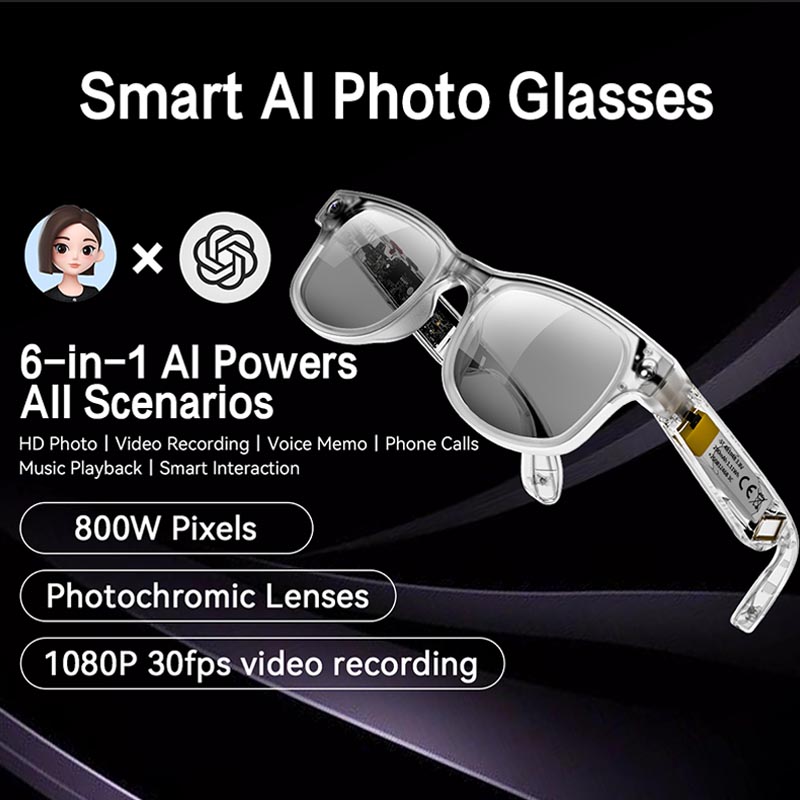

As wearable computing advances at breakneck speed, AI glasses are emerging as a powerful new frontier. In this article, we’ll explore how AI glasses work—what makes them tick—from the sensing hardware to the onboard and cloud brains, to how your information is delivered seamlessly. At Wellyp Audio, we believe understanding the technology is the key to making truly differentiated, high-quality AI eyewear (and companion audio products) for the global market.

1. The three-step model: Input → Processing → Output

When we say “How it works: the tech behind AI glasses”, the simplest way to frame it is as a flow of three stages: Input (how the glasses sense the world), Processing (how data is interpreted and transformed), and Output (how that intelligence is delivered to you).

Many of today’s systems adopt this three-part architecture. For example, one recent article states: “AI glasses operate on a three-step principle: Input (capturing data via sensors), Processing (using AI to interpret data), and Output (delivering information via a display or audio).”

In the following sections, we’ll break down each stage in depth, adding the key technologies, design trade-offs, and how Wellyp Audio thinks about them.
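To make the three-stage flow concrete, here is a minimal Python sketch. It is an illustration only, not Wellyp firmware: the class names, the `process` and `deliver` functions, and the hard-coded answer are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SensorFrame:
    """Input stage: one snapshot of what the glasses sensed (hypothetical)."""
    transcript: str  # text already recognised from the microphone array


@dataclass
class Response:
    """Result of the processing stage, ready for an output channel."""
    text: str
    channel: str  # "audio" or "display"


def process(frame: SensorFrame, has_display: bool) -> Response:
    """Processing stage: interpret the input and choose an output channel."""
    command = frame.transcript.strip().lower()
    if command == "what's the time?":
        text = "It is 10:30."  # placeholder; a real device would read its clock
    else:
        text = f"Sorry, I did not understand: {frame.transcript}"
    channel = "display" if has_display else "audio"
    return Response(text=text, channel=channel)


def deliver(response: Response) -> str:
    """Output stage: format the response for the chosen channel."""
    prefix = "[HUD]" if response.channel == "display" else "[speaker]"
    return f"{prefix} {response.text}"


frame = SensorFrame(transcript="What's the time?")
print(deliver(process(frame, has_display=False)))  # prints "[speaker] It is 10:30."
```

Keeping the three stages as separate functions mirrors the architecture described above: a display-less model only changes the `has_display` flag, not the processing logic.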

2. Input: sensing and connectivity

The first major phase of an AI-glasses system is gathering information from the world and from the user. Unlike a smartphone that you point and pick up, AI glasses aim to be always on, context-aware, and seamlessly integrated into your daily life. Here are the main elements:

2.1 Microphone array & voice input

A high-quality microphone array is a critical input channel. It allows voice commands (“Hey Glasses, translate this phrase”, “What’s that sign say?”), natural-language interaction, live captioning or translation of conversations, and environmental listening for context. For example, one source explains:

A high-quality microphone array … is designed to capture your voice commands clearly, even in noisy environments, allowing you to ask questions, take notes, or get translations.

From Wellyp’s perspective, when designing an AI glasses product with companion audio (e.g., TWS earbuds or over-ear plus glasses combo), we see the microphone subsystem as not only speech capture but also ambient audio capture for context awareness, noise suppression, and even future spatial sound features.

2.2 IMU and motion sensors

Motion sensing is essential for glasses: tracking head orientation, movement, gestures, and stability of overlays or displays. The IMU (inertial measurement unit)—typically combining accelerometer + gyroscope (and sometimes magnetometer)—enables spatial awareness. One article states:

An IMU is a combination of an accelerometer and a gyroscope. This sensor tracks your head’s orientation and movement. … This AI glasses technology is fundamental for features that require spatial awareness.

In Wellyp’s design mindset, the IMU enables:

● stabilization of any on-lens display when the wearer is moving

● gesture detection (e.g., nod, shake, tilt)

● environment awareness (when combined with other sensors)

● power-optimised sleep/wake detection (e.g., glasses removed/put on)
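As an illustration of the gesture-detection item above, the following Python sketch flags a nod from gyroscope pitch-rate samples. It is a simplified heuristic under stated assumptions, not a production algorithm: the function name, the 60 degrees-per-second threshold, the 25-sample window (about half a second at an assumed 50 Hz sample rate), and the sign convention are all hypothetical.

```python
def detect_nod(pitch_rates_dps, threshold_dps=60.0, max_gap_samples=25):
    """Return True if a down-then-up head motion appears in the gyro samples.

    pitch_rates_dps: gyroscope pitch-axis readings in degrees per second,
    with positive values meaning the head tilts down (assumed convention).
    """
    down_index = None
    for i, rate in enumerate(pitch_rates_dps):
        if rate > threshold_dps:
            down_index = i  # remember the latest downward swing
        elif rate < -threshold_dps and down_index is not None:
            if i - down_index <= max_gap_samples:
                return True  # upward swing followed quickly enough
            down_index = None  # too slow to count as a nod; keep looking
    return False


nod = [0, 80, 90, 10, -85, -70, 0]
still = [0, 5, -3, 2]
print(detect_nod(nod), detect_nod(still))  # prints "True False"
```

A real device would typically filter the raw samples and fuse them with accelerometer data before applying thresholds like these.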

2.3 (Optional) Camera / Visual sensors

Some AI glasses include outward-facing cameras, depth sensors or even scene recognition modules. These enable computer-vision features like object recognition, translation of text in view, face recognition, environment mapping (SLAM) etc. One source notes:

Smart glasses for the visually impaired utilise AI for object and face recognition … the glasses support navigation through location services, Bluetooth, and built-in IMU sensors.

However, cameras add cost, complexity, power draw, and raise privacy concerns. Many devices opt for a more privacy-first architecture by omitting the camera and relying on audio + motion sensors instead. At Wellypaudio, depending on the target market (consumer vs enterprise), we may choose to include a camera module (e.g., 8–13MP) or omit it for lightweight, low-cost, privacy-first models.

2.4 Connectivity: linking to the smart-ecosystem

AI glasses are rarely fully standalone—rather, they are extensions of your smartphone or wireless audio ecosystem. Connectivity enables updates, heavier processing off-device, cloud features, and user app control. The typical links:

● Bluetooth LE: always-on low-power link to the phone, for sensor data, commands, and audio.

● WiFi / cellular tethering: for heavier tasks (AI model queries, updates, streaming)

● Companion App: on your smartphone for personalization, analytics, settings, and data review

From a Wellyp perspective, integration with our TWS/over-ear ecosystem means seamless switching between glasses + headphone audio, smart assistant, translation or ambient-listening modes, and firmware updates over the air.

2.5 Summary – why Input matters

The quality of the input subsystem sets the stage: better microphones, cleaner motion data, robust connectivity, and thoughtful sensor fusion all add up to a better experience. If your glasses mishear commands, mis-detect head movement, or lag due to connectivity issues, the experience suffers. Wellyp emphasises input subsystem design as a foundation for high-end AI glasses.

3. Processing: on-device brains & cloud intelligence

Once the glasses have gathered input, the next phase is to process that information: interpret voice, identify context, decide what response to give, and prepare output. This is where the “AI” in AI glasses takes centre stage.

3.1 On-device computing: System-on-Chip (SoC)

Modern AI glasses include a small but capable processor—often called a system-on-chip (SoC) or dedicated microcontroller/NPU—that handles always-on tasks, sensor fusion, voice keyword detection, wake-word listening, basic commands, and low-latency local responses. As one article explains:

Every pair of AI glasses contains a small, low-power processor, often called a System on a Chip (SoC). … This is the local brain, responsible for running the device’s operating system—managing the sensors and handling basic commands.

Wellyp’s design strategy includes choosing a low-power SoC that supports:

● voice keyword/wake-word detection

● local NLP for simple commands (e.g., “What’s the time?”, “Translate this sentence”)

● sensor fusion (microphone + IMU + optional camera)

● connectivity and power-management tasks

Because power and form-factor are critical in eyewear, the on-device SoC must be efficient, compact, and generate minimal heat.

3.2 Hybrid local vs cloud AI processing

For more complex queries (e.g., “Translate this conversation in real time”, “Summarize my meeting”, “Identify this object”, or “What’s the best route to avoid traffic?”), the heavy lifting is done in the cloud, where large AI models, neural networks, and large compute clusters are available. The trade-off is latency, connectivity requirements, and privacy. As noted:

A key part is deciding where to process a request. This decision balances speed, privacy, and power.

● Local processing: Simple tasks are handled directly on the glasses or on your connected smartphone. This is faster, uses less data, and keeps your information private.

● Cloud processing: For complex queries that require advanced generative AI models … the request is sent to powerful servers in the cloud. … This hybrid approach allows for powerful AI glasses function without requiring a massive, power-hungry processor inside the frames.

Wellyp’s architecture sets up this hybrid processing model as follows:

● Use local processing for sensor fusion, wake-word detection, basic voice commands, and offline translation (small model)

● For advanced queries (e.g., multi-language translation, image recognition (if camera present), generative responses, contextual suggestions), send to cloud via smartphone or WiFi.

● Ensure data encryption, minimal latency, fallback offline experience, and user-privacy-oriented features.
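The routing decision in this hybrid model can be sketched as a small Python function. This is a hedged illustration of the idea, not Wellyp's implementation: the task names, the `Route` enum, and the fallback rule (degrading multi-language translation to the small offline model) are assumptions made for the example.

```python
from enum import Enum


class Route(Enum):
    LOCAL = "local"
    CLOUD = "cloud"
    UNAVAILABLE = "unavailable"


# Hypothetical task names; a real product would define its own catalogue.
LOCAL_TASKS = {"wake_word", "basic_command", "offline_translation"}
CLOUD_TASKS = {"multi_language_translation", "image_recognition", "generative_response"}


def choose_route(task: str, online: bool, cloud_consent: bool) -> Route:
    """Decide where a request runs, preferring on-device processing."""
    if task in LOCAL_TASKS:
        return Route.LOCAL
    if task in CLOUD_TASKS:
        if online and cloud_consent:
            return Route.CLOUD
        # Offline fallback: degrade to the small on-device translation model.
        if task == "multi_language_translation":
            return Route.LOCAL
        return Route.UNAVAILABLE
    raise ValueError(f"unknown task: {task}")


print(choose_route("wake_word", online=False, cloud_consent=False))        # Route.LOCAL
print(choose_route("generative_response", online=True, cloud_consent=True))  # Route.CLOUD
```

Checking consent in the same place as connectivity keeps the privacy rule hard to bypass: a cloud-only task without consent is reported as unavailable rather than silently sent off-device.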

3.3 Software ecosystem, companion app & firmware

Behind the hardware lies a software stack: a lightweight OS on the glasses, a companion smartphone app, cloud backend, and third-party integrations (voice assistants, translation engines, enterprise APIs). As one article describes:

The final piece of the processing puzzle is the software. The glasses run a lightweight operating system, but most of your settings and personalization happen in a companion app on your smartphone. This app acts as the command centre—allowing you to manage notifications, customise features, and review information captured by the glasses.

From Wellyp’s standpoint:

● Ensure firmware updates OTA (over-the-air) for future features

● Allow the companion app to manage user preferences (e.g., language translation preferences, notification types, audio tuning)

● Provide analytics/diagnostics (battery usage, sensor health, connectivity status)

● Maintain robust privacy policies: data only leaves the device or smartphone under clear user consent.

4. Output: delivering information

After input and processing, the final piece is output—how the glasses deliver intelligence and feedback to you. The goal is to be seamless, intuitive, and minimally disruptive to your primary tasks of seeing and hearing the world.

4.1 Visual output: Head-Up Display (HUD) & waveguides

One of the most visible technologies in AI glasses is the display system. Rather than a big screen, wearable AI glasses often use a transparent visual overlay (HUD) through projection or waveguide technology. For example:

The most noticeable AI smart glasses feature is the visual display. Instead of a solid screen, AI glasses use a projection system to create a transparent image that appears to float in your field of view. This is often achieved with micro-OLED projectors and waveguide technology, which guides light across the lens and directs it toward your eye.

A useful technical reference: companies such as Lumus specialise in waveguide optics used for AR/AI glasses.

Key considerations for Wellyp in designing the optical output system:

● Minimal obstruction of real-world view

● High brightness and contrast so overlay remains visible in daylight

● Thin lens/frames to maintain aesthetic and comfort

● Field-of-view (FoV) balancing readability vs wearability

● Integration with prescription lenses when needed

● Minimal power consumption and heat generation

4.2 Audio output: open-ear, bone-conduction, or in-temple speakers

For many AI glasses (especially when no display is present), audio is the primary channel for feedback—voice responses, notifications, translations, ambient listening, etc. Two common approaches:

● In-temple speakers: small speakers embedded in the arms, directed toward the ear. Mentioned in one article:

For models without built-in display, audio cues are used … typically done through small speakers located in the arms of the glasses.

● Bone-conduction: transmits audio through skull bones, leaving ear canals open. Some modern wearables use this for situational awareness. For example:

Audio & Mics: Audio is delivered via dual bone conduction speakers …

From Wellyp’s audio-centric perspective, we emphasize:

● High-quality audio (clear speech, natural voice)

● Low latency for voice assistant interactions

● Comfortable open-ear design preserving ambient awareness

● Seamless switching between glasses and true wireless earbuds (TWS) or over-ear headphones that we manufacture

4.3 Haptic / vibration feedback (optional)

Another output channel, especially for discreet notifications (e.g., “You have a translation ready”) or alerts (low battery, incoming call), is haptic feedback via the frame or earpieces. Although haptics are not yet common in mainstream AI glasses, Wellyp considers haptic cues a complementary modality in product design.

4.4 Output experience: blending the real + digital world

The key is to blend digital information into your real-world context without pulling you out of the moment. For example, overlaying translation subtitles while you speak with someone, showing navigation cues in the lens while walking, or giving audio prompts while you’re listening to music. Effective AI glasses output respects your environment: minimal distraction, maximum relevance.

5. Power, battery and form-factor trade-offs

One of the biggest engineering challenges in AI glasses is power management and miniaturisation. Lightweight, comfortable eyewear cannot house the large batteries of smartphones or AR headsets. Some key considerations:

5.1 Battery technology & embedded design

AI glasses often use custom-shaped lithium-polymer (LiPo) batteries embedded in the arms of the frames. For example:

AI glasses use custom-shaped, high-density Lithium-Polymer (LiPo) batteries. These are small and lightweight enough to be embedded into the arms of the glasses without adding excessive bulk or weight. (Even Realities)

Design trade-offs for Wellyp: battery capacity vs weight vs comfort; trade-offs in runtime vs standby; heat dissipation; frame thickness; user-replaceability vs sealed design.

5.2 Battery life expectations

Because of size constraints and always-on features (microphones, sensors, connectivity), battery life is often measured in hours of active use rather than a full day of heavy tasks. One article notes:

Battery life varies depending on usage, but most AI glasses are designed to last for several hours of moderate use, which includes occasional AI queries, notifications, and audio playback.

Wellyp’s target: design for at least 4–6 hours of mixed usage (voice queries, translation, audio play) with a standby of a full day; in premium designs, push to 8+ hours.
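A back-of-the-envelope way to reason about such targets is runtime = capacity / average current. The Python sketch below shows that arithmetic. All numbers (a 200 mAh battery, 40 mA active draw, 5 mA standby draw) are illustrative assumptions, not Wellyp specifications, and the estimate ignores conversion losses and battery ageing.

```python
def average_current_ma(active_ma: float, standby_ma: float, active_fraction: float) -> float:
    """Blend active and standby draw by the fraction of time spent active."""
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    return active_fraction * active_ma + (1.0 - active_fraction) * standby_ma


def runtime_hours(capacity_mah: float, current_ma: float) -> float:
    """Idealised runtime: battery capacity divided by average current."""
    return capacity_mah / current_ma


CAPACITY_MAH = 200.0  # assumed capacity of the in-frame battery
ACTIVE_MA = 40.0      # assumed draw during voice queries and audio playback
STANDBY_MA = 5.0      # assumed draw while only listening for the wake word

print(runtime_hours(CAPACITY_MAH, ACTIVE_MA))   # continuous active use: 5.0 hours
print(runtime_hours(CAPACITY_MAH, STANDBY_MA))  # pure standby: 40.0 hours
print(runtime_hours(CAPACITY_MAH, average_current_ma(ACTIVE_MA, STANDBY_MA, 0.5)))
```

Under these assumed figures, continuous active use lands inside a 4–6 hour window, and lowering the active fraction or the standby draw is what extends the total towards a full day.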

5.3 Charging and accessory cases

Many glasses include a charging case (especially TWS-earbud hybrids) or a dedicated charger for eyewear. These can supplement the on-device battery, allow easier portability, and protect the device when not in use. Some eyewear designs are beginning to adopt charging cases or cradle docks. Wellyp’s product roadmap includes an optional charging case for AI eyewear, especially when paired with our TWS products.

5.4 Form-factor, comfort and weight

Failing to design for comfort means the best AI glasses will sit unused. Essentials:

● Target weight ideally < 50g (for glasses only)

● Balanced frame (so arms don’t pull forward)

● Lens options: clear, sunglasses, prescription compatible

● Venting/heat-dissipation for processing module

● Style and aesthetics aligned with consumer preferences (glasses must look like glasses)

Wellyp works with experienced eyewear OEM partners to optimise form-factor while accommodating sensor, battery and connectivity modules.

6. Privacy, security and regulatory considerations

When designing AI glasses technology, the Input → Processing → Output chain must also address privacy, security and regulatory compliance.

6.1 Camera vs no-camera: privacy trade-offs

As mentioned, including a camera opens up a lot of potential (object recognition, scene capture) but also raises privacy concerns (recording of bystanders, legal issues). One article highlights:

Many smart glasses use a camera as a primary input. However, this raises significant privacy concerns … By relying on audio and motion inputs … it focuses on AI-driven assistance … without recording your surroundings.

At Wellyp, we consider two tiers:

● A privacy-first model with no outward-facing camera but high-quality audio/IMU for translation, voice assistant, and ambient awareness

● A premium model with camera/vision sensors, but with user-consent mechanisms, clear indicators (LEDs), and robust data-privacy architecture

6.2 Data security & connectivity

Connectivity means cloud links; this brings risk. Wellyp implements:

● Secure Bluetooth pairing and data encryption

● Secure firmware updates

● User consent for cloud features and data sharing

● Clear privacy policy, and the ability for the user to opt out of cloud features (offline mode)
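One building block of a secure firmware update is an integrity check on the downloaded image. The Python sketch below shows only that step, using the standard `hashlib` and `hmac` modules. It is a simplified illustration and not a description of Wellyp's update mechanism: the function names are hypothetical, and a production OTA pipeline would normally also verify a cryptographic signature over the image rather than rely on a digest alone.

```python
import hashlib
import hmac


def firmware_digest(image: bytes) -> str:
    """Return the SHA-256 digest of a firmware image as a hex string."""
    return hashlib.sha256(image).hexdigest()


def is_image_intact(image: bytes, expected_digest_hex: str) -> bool:
    """Compare the image digest with the expected value in constant time."""
    actual = firmware_digest(image)
    return hmac.compare_digest(actual, expected_digest_hex.lower())


image = b"demo firmware image"       # stand-in for a downloaded update
published = firmware_digest(image)   # digest the vendor would publish (assumed)

print(is_image_intact(image, published))            # True
print(is_image_intact(image + b"\x00", published))  # False: image was altered
```

The device would refuse to install any image for which the check returns False, and keep running its current firmware.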

6.3 Regulatory/safety aspects

Since eyewear can be worn while walking, commuting or even driving, the design must comply with local laws (e.g., restrictions on displays while driving). One FAQ notes:

Can you drive with AI glasses? This depends on local laws and the specific device.

Also, optical output must avoid obstructing vision, causing eye-strain or safety risk; audio must maintain ambient awareness; battery must meet safety standards; materials must comply with wearable electronics regulation. Wellyp’s compliance team ensures we meet CE, FCC, UKCA, and other applicable region-specific regulations.

7. Use-cases: what these AI glasses enable

Understanding the tech is one thing; seeing the practical applications makes it compelling. Here are representative use-cases for AI glasses (and where Wellyp is focusing):

● Real-time language translation: Conversations in foreign languages are translated on the fly and delivered via audio or visual overlay

● Voice assistant always-on: Hands-free queries, note-taking, reminders, contextual suggestions (like “You’re near that café you liked”)

● Live captioning/transcription: For meetings, lectures or conversations, AI glasses can transcribe speech and deliver it as audio in your ear or as captions on the lens

● Object recognition & context awareness (with camera version): Identify objects, landmarks, faces (with permission), and provide audio or visual context

● Navigation & augmentation: Walking directions overlaid on lens; audio prompts for directions; heads-up notifications

● Health/fitness + audio integration: Since Wellyp specialises in audio, combining glasses with TWS earbuds or over-ear headphones means a seamless transition: spatial audio cues, environmental awareness, plus an AI assistant while listening to music or a podcast

● Enterprise/industrial uses: Hands-free checklists, warehouse logistics, field-service technicians with overlay instructions

By aligning our hardware, software and audio ecosystems, Wellyp aims to deliver AI glasses that serve both consumer and enterprise segments with high performance and seamless usability.

8. What differentiates Wellyp Audio’s vision

As a manufacturer specialising in customization and wholesale services, Wellyp Audio brings specific strengths to the AI glasses space:

● Audio + wearable integration: Our heritage in audio products (TWS, over-ear, USB-audio) means we bring advanced audio input/output, noise suppression, open-ear design, companion audio syncing

● Modular customization & OEM flexibility: We specialise in customization—frame design, sensor modules, colourways, branding—ideal for wholesale/B2B partners

● End-to-end manufacturing for the wireless/Bluetooth ecosystem: Many AI glasses will pair with earbuds or over-ear headphones; Wellyp already covers these categories and can deliver a full ecosystem

● Global market experience: With target markets including the UK and beyond, we understand regional certification, distribution challenges, and consumer preferences

● Focus on hybrid processing & privacy: We align product strategy to the hybrid model (on-device + cloud) and offer configurable camera/no-camera variants for different customer priorities

In short: Wellyp Audio is positioned not just to produce AI glasses, but to deliver a wearables ecosystem around AI-assisted eyewear, audio, connectivity and software.

9. Frequently Asked Questions (FAQs)

Q: Do AI glasses need a constant internet connection?

A: No—for basic tasks, local processing suffices. For advanced AI queries (large models, cloud-based services) you’ll need connectivity.

Q: Can I use prescription lenses with AI glasses?

A: Yes—many designs support prescription or custom lenses, with optical modules designed to integrate different lens powers.

Q: Will wearing AI glasses distract me while driving or walking?

A: It depends. The display must be non-obstructive, audio should maintain ambient awareness, and local laws vary. Prioritise safety and check regulations.

Q: How long will the battery last?

A: It depends on usage. Many AI glasses aim for “several hours” of active use—involving voice queries, translation, audio playback. Standby time is longer.

Q: Are AI glasses just AR glasses?

A: Not exactly. AR glasses focus on overlaying graphics on the world. AI glasses emphasise intelligent assistance, context awareness and voice/audio integration. The hardware may overlap.

The technology behind AI glasses is a fascinating orchestration of sensors, connectivity, computing and human-centred design. From the microphone and IMU capturing your world, through the hybrid local/cloud processing interpreting data, to displays and audio delivering intelligence—this is how the smart eyewear of the future works.

At Wellyp Audio, we’re excited to bring this vision to life: combining our audio expertise, wearable manufacturing, customization capabilities and global market reach. If you’re looking to build, brand or wholesale AI glasses (or companion audio gear), understanding “How it works: the tech behind AI glasses” is the essential first step.

Stay tuned for Wellyp’s upcoming product releases in this space — redefining how you see, hear and interact with your world.

Ready to explore custom wearable smart glass solutions? Contact Wellypaudio today to discover how we can co-design your next-generation AI or AR smart eyewear for the global consumer and wholesale market.

Post time: Nov-08-2025